¶ Metadata Provider

The metadata provider is a component collecting metadata about the states and metrics of trains and sending them to a central metadata store, where they can be globally accessed.

For this, it relies on the padme metadata schema, available here.

It uses rdf as an universal language for describing information and knowledge.

The metadata service allows users of trains to see almost real time metrics and state changes of their trains and gives them insight into the performance.

In the future, metadata will also include static information, making the stations more findable and accessible.

Additional information about the metadata architecture can be found here

Setup of the metadata provider is part of the general setup. For this please refer to initial-station-setup

¶ Metadata collection on the station side

Currently, two types of metadata are collected from the station: States and metrics.

The states are directly communicated to the metadata provider from the station software. This includes state changes such as “Train started running” or “Started downloading Train”.

The second type of metadata, metrics, is collected from the docker engine. This includes information such as the memory consumption of a train.

Both types of metadata are expressed with “Events”, describing a piece of metadata at a specific point in time.

The metadata provider buffers those events and periodically signs them with a secret key before sending it to the metadata store

¶ Metadata store

The metadata store persistently collects all metadata produced by station.

To validate the origin of metadata, it only accepts metadata signed with the correct secret key. This hinders that entities can wrongfully inject metadata describing other components.

The metadata is processed into documents, describing the component in linked-data fashion.

The documents are presented under well-meaning URL, for example the document describing a Station is available under its IRI.

Currently, there is no access controll regarding viewing the documents. However, currently, only non-sensitive information is collected.

¶ Metadata Store API

Information from the store can be retrieved via two ways: In the form of documents or via a SPARQL interface.

The documents can be retrieved via each stations URL. For information that can be contained in the documents consult https://schema.padme-analytics.de.

The SPARQL interface resides at https://metadata.padme-analytics.de/entities/query.

See https://www.w3.org/TR/sparql11-overview/ for information about the SPARQL query language.

¶ Security

As already mentioned, spoofing components is prevented by a shared secret which only the central store and the corrosponding metadata provider has. This secret is communicated during the setup process of a station and can be set manually.

The communication of the secret is realised with a one-time use token, injected into the stations configuration file during onboarding, preventing all entities in setting the secret key for the station but the owner of the configuration file.

To control which kind of metadata is communicate, a filter list is contained in the provider. This list can be configured during the installation process. If the list is set as an allow list, only events in this list are sent to the store.

Otherwise, all events but those in the list are sent. In this filter list, events are identified with their class URL, which can be found on the padme schema page.

If one do not want to provide metadata, we advise setting the filter to an allow list during the setup and keeping the list empty, prohibiting all events.

Note: To set the filter list initially, you need to press the “set filterlist” button, even if nothing is changed during the setup dialog. Otherwise, the filterlist will be kept uninitialised, allowing every event.

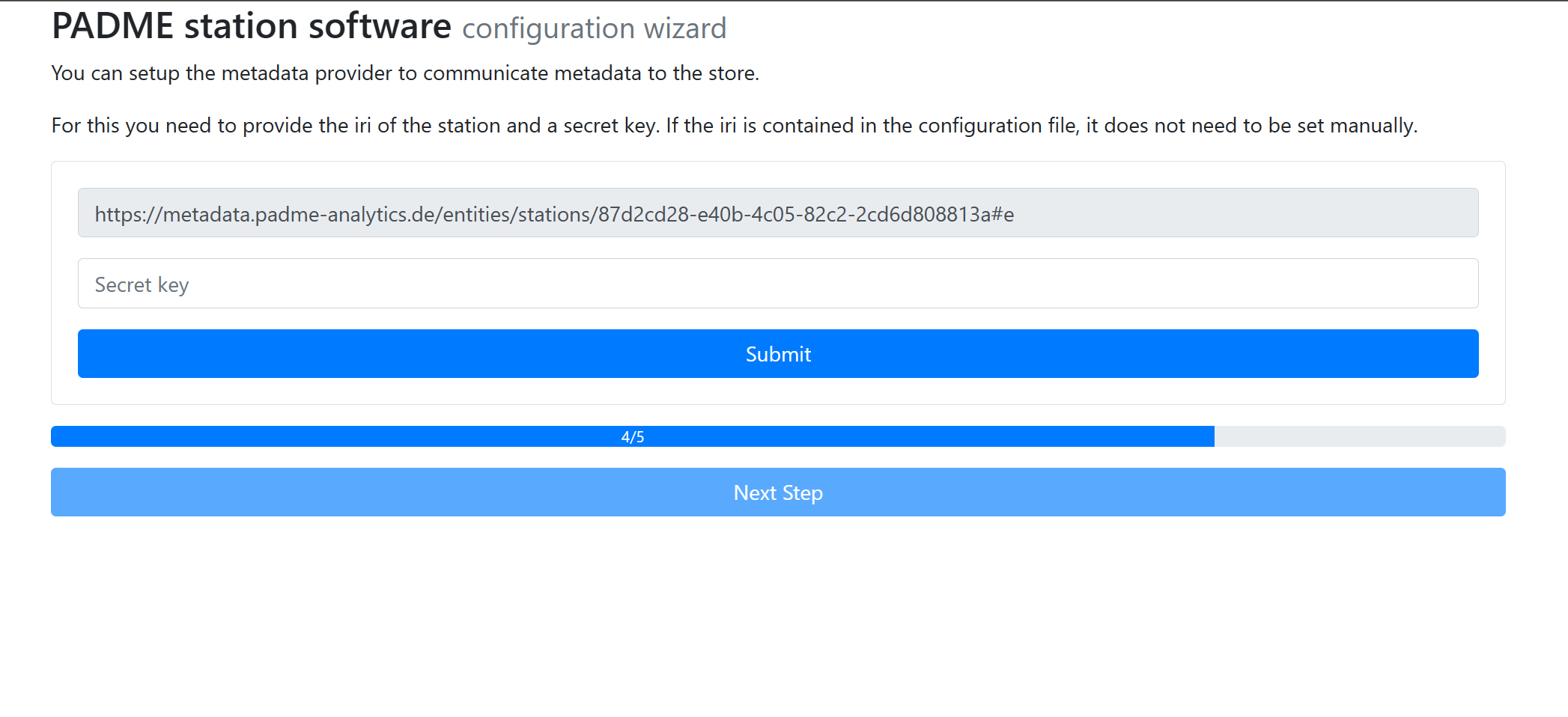

The initial setup view for the metadata system can be seen below: