The work presented here is a proof of concept and is by no means ready for production use.

¶ Overview

Integrating Eclipse Dataspace Components (EDC) into the PADME ecosystem makes it easier to share analysis results securely. By registering these results as assets within the EDC framework, researchers can negotiate contracts for them and share them with other partners in the data space. This approach keeps PADME’s privacy-first model intact while taking advantage of EDC’s secure and standardized data-sharing capabilities.

¶ Methodology

Because our objective is not only to share data with partners in the data space but also to consume data from them, we identified two key roles within the PADME ecosystem. The first role is that of a data provider, where researchers share their insights with other partners in the data space after performing distributed analytics (DA) using PADME. The second role is that of a data consumer, where researchers request and consume insights generated by other partners in the data space for further analysis within the PADME framework. To facilitate this, the EDC framework supports two distinct modes of data transfer between partners: Provider Push, where the provider actively sends the data, and Consumer Pull, where the consumer requests and retrieves the data.

¶ Provider Push

The provider EDC fetches the data from its own backend and pushes it to the consumer’s desired data sink. The data sink should be an HTTP endpoint that the provider connector can reach. This mode is useful when data can be pushed in one go or in a batch process. It is efficient when the data provider controls when and how data is extracted from its backend systems and can deliver it to consumers in a timely manner.
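As a rough illustration of Provider Push, the sketch below builds the JSON body that a consumer might send to its own connector’s management API to start such a transfer. The field names (`transferType`, `dataDestination`, `HttpData-PUSH`) are modeled on the public EDC samples and are an assumption here; they can differ between connector versions. The function name, IDs, and URLs are hypothetical.

```python
import json

# JSON-LD vocabulary used by EDC management API payloads (assumed).
EDC_NS = "https://w3id.org/edc/v0.0.1/ns/"


def build_push_transfer_request(contract_id, asset_id, provider_protocol_url, sink_url):
    """Build a Provider Push transfer request body (assumed schema)."""
    return {
        "@context": {"@vocab": EDC_NS},
        "@type": "TransferRequestDto",  # type name varies across EDC versions
        "counterPartyAddress": provider_protocol_url,
        "contractId": contract_id,
        "assetId": asset_id,
        "protocol": "dataspace-protocol-http",
        "transferType": "HttpData-PUSH",
        # The data sink: an HTTP endpoint the provider connector can reach.
        "dataDestination": {"type": "HttpData", "baseUrl": sink_url},
    }


# Hypothetical values, for illustration only.
request_body = build_push_transfer_request(
    contract_id="agreement-1",
    asset_id="analysis-result-1",
    provider_protocol_url="http://provider:19194/protocol",
    sink_url="http://consumer-backend:4000/store",
)
print(json.dumps(request_body, indent=2))
```

The important part is `dataDestination`: in this mode the consumer names the sink up front, and the provider delivers the data there.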

¶ Consumer Pull

Upon the consumer’s transfer request, the data provider exposes credentials for accessing the data. The data consumer then actively pulls the data using these credentials. This mode gives consumers more control over when and what data they receive, making it suitable for scenarios where consumers need specific datasets on demand or at regular intervals.
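The pull step itself can be sketched as follows. We assume the exposed credentials arrive as a small dictionary with an `endpoint` URL and an `authorization` token, which mirrors the endpoint data reference used in the EDC samples; the exact shape depends on the connector version. The function names are hypothetical.

```python
import urllib.request


def build_pull_request(credentials):
    """Create a GET request for the provider's data endpoint.

    `credentials` is assumed to hold the keys "endpoint" and "authorization".
    """
    return urllib.request.Request(
        credentials["endpoint"],
        headers={"Authorization": credentials["authorization"]},
        method="GET",
    )


def pull_data(credentials, timeout=30):
    """Fetch the data on demand; returns the raw response body as bytes."""
    with urllib.request.urlopen(build_pull_request(credentials), timeout=timeout) as response:
        return response.read()
```

Because the consumer decides when to call `pull_data`, the same credentials can be reused for repeated requests for as long as the provider keeps them valid.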

¶ PADME as Data Provider

To initiate DA experiments in PADME, researchers can use the PADME Central Service with EDC deployed alongside it. After selecting the analysis train and station route, the analysis can be started. The researcher can view the analysis results and download them after the analysis finishes execution on the Stations. Based on the significance of the results, the researcher may decide to share them with other research organizations. This is where PADME utilizes EDC connectors to register analysis results and create contracts so other organizations in the dataspace can discover and use these results for research purposes.

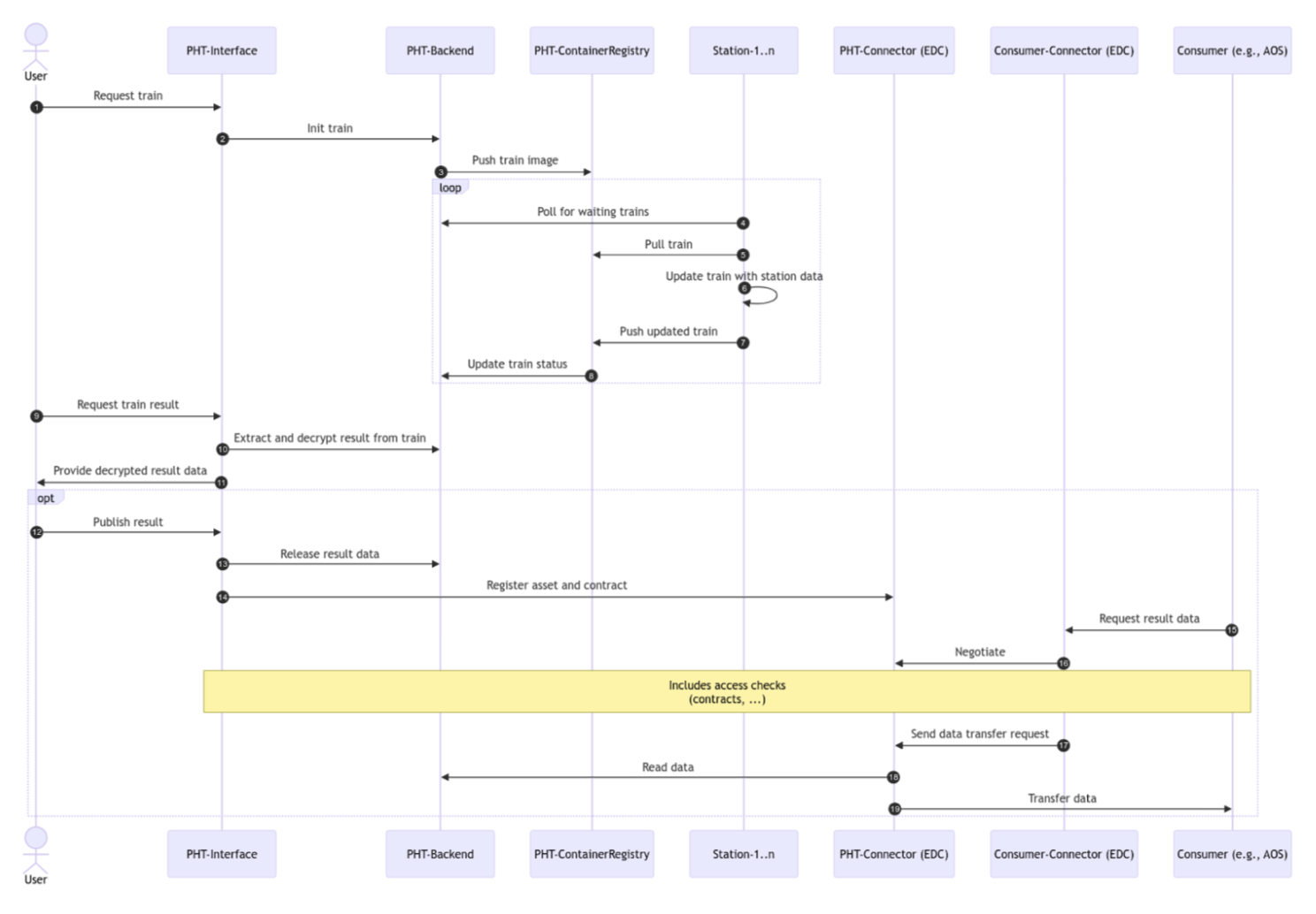

The following is a sequence diagram of the interaction between the Central Service and the EDC Connector.

To use the EDC connector, we first need to deploy it alongside the Central Service. We do this by adding sovity’s EDC CaaS to the Docker Compose file as follows. More information can be found here.

```yaml
services:
  centralservice:
    ...
    environment:
      ...
      EDC_HOST: pht-edc
      EDC_PORT: 11002
      EDC_MANAGEMENT_API_KEY: ApiKeyDefaultValue # should be changed
      EDC_API_AUTH_KEY: SecureAPIAuthKey # should be changed
    ...
  postgresql-edc:
    image: docker.io/bitnami/postgresql:11
    restart: unless-stopped
    container_name: postgresql-edc
    environment:
      POSTGRESQL_USERNAME: edc
      POSTGRESQL_PASSWORD: edc
      POSTGRESQL_DATABASE: edc
    ports:
      - "54321:5432"
    volumes:
      - "postgresql-edc-data:/bitnami/postgresql"
    networks:
      centernetwork:
  pht-edc:
    image: ghcr.io/sovity/edc-dev:5.0.0
    container_name: pht-edc
    depends_on:
      - postgresql-edc
    environment:
      VIRTUAL_PORT: 11003
      VIRTUAL_HOST: "connector.${SERVICE_DOMAIN}, www.connector.${SERVICE_DOMAIN}"
      LETSENCRYPT_HOST: "connector.${SERVICE_DOMAIN}, www.connector.${SERVICE_DOMAIN}"
      MY_EDC_NAME_KEBAB_CASE: "central-service-edc"
      MY_EDC_TITLE: "Central Service EDC"
      MY_EDC_DESCRIPTION: "sovity Community Edition EDC Connector"
      MY_EDC_CURATOR_URL: "https://example.com"
      MY_EDC_CURATOR_NAME: "Example GmbH"
      MY_EDC_MAINTAINER_URL: "https://sovity.de"
      MY_EDC_MAINTAINER_NAME: "sovity GmbH"
      MY_EDC_FQDN: connector.${SERVICE_DOMAIN}
      EDC_API_AUTH_KEY: ApiKeyDefaultValue # should match 'EDC_MANAGEMENT_API_KEY' in centralservice
      MY_EDC_JDBC_URL: jdbc:postgresql://postgresql-edc:5432/edc
      MY_EDC_JDBC_USER: edc
      MY_EDC_JDBC_PASSWORD: edc
      MY_EDC_PROTOCOL: "https://"
      EDC_DSP_CALLBACK_ADDRESS: https://connector.${SERVICE_DOMAIN}/api/dsp
    networks:
      proxynet:
      centernetwork:
...
```
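To show how the `EDC_HOST`, `EDC_PORT`, and `EDC_MANAGEMENT_API_KEY` variables configured for `centralservice` might be used, the following sketch prepares a request that registers an analysis result as an asset. It is not the Central Service’s actual code. The `/api/management` base path, the `/v3/assets` route, the `X-Api-Key` header, and the payload shape are assumptions modeled on the EDC management API and should be checked against the deployed connector version. The function names and the result URL are hypothetical.

```python
import json
import os
import urllib.request

# JSON-LD vocabulary used by EDC management API payloads (assumed).
EDC_NS = "https://w3id.org/edc/v0.0.1/ns/"


def management_url(path, env=None):
    """Build a management API URL from the EDC_HOST and EDC_PORT variables."""
    env = os.environ if env is None else env
    host = env.get("EDC_HOST", "pht-edc")
    port = env.get("EDC_PORT", "11002")
    # "/api/management" is an assumed base path.
    return f"http://{host}:{port}/api/management{path}"


def build_asset_request(asset_id, result_url, env=None):
    """Prepare (but do not send) a request that registers a result as an asset."""
    env = os.environ if env is None else env
    body = {
        "@context": {"@vocab": EDC_NS},
        "@id": asset_id,
        "properties": {"name": asset_id},
        # Assumed: the result file is reachable over HTTP at result_url.
        "dataAddress": {"type": "HttpData", "baseUrl": result_url},
    }
    return urllib.request.Request(
        management_url("/v3/assets", env),  # route and version are assumptions
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Api-Key": env.get("EDC_MANAGEMENT_API_KEY", ""),
        },
        method="POST",
    )
```

Passing the prepared request to `urllib.request.urlopen` would submit it. Policy and contract definitions would be created with further requests of the same kind before other participants can discover the asset.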

¶ PADME as Data Consumer

In the second use case, our objective was to enable researchers within the PADME ecosystem to access and consume data provided by other data sources within the data space. Initially, we considered using sovity’s EDC Connector; however, its Consumer Pull data transfer capability is only available in the paid version. We therefore decided to use the original EDC Connector provided by the Eclipse Foundation, which required additional effort to integrate into PADME. After some trial and error, we successfully set up a Consumer Connector within the PADME ecosystem that can parse the data catalogs of other connectors in the data space, negotiate contracts, and consume data for analysis. After a contract negotiation completes, PADME’s Consumer Connector provides temporary credentials for data access. The analysis algorithm then uses these credentials to access the necessary data on demand, enabling the execution of the analysis.

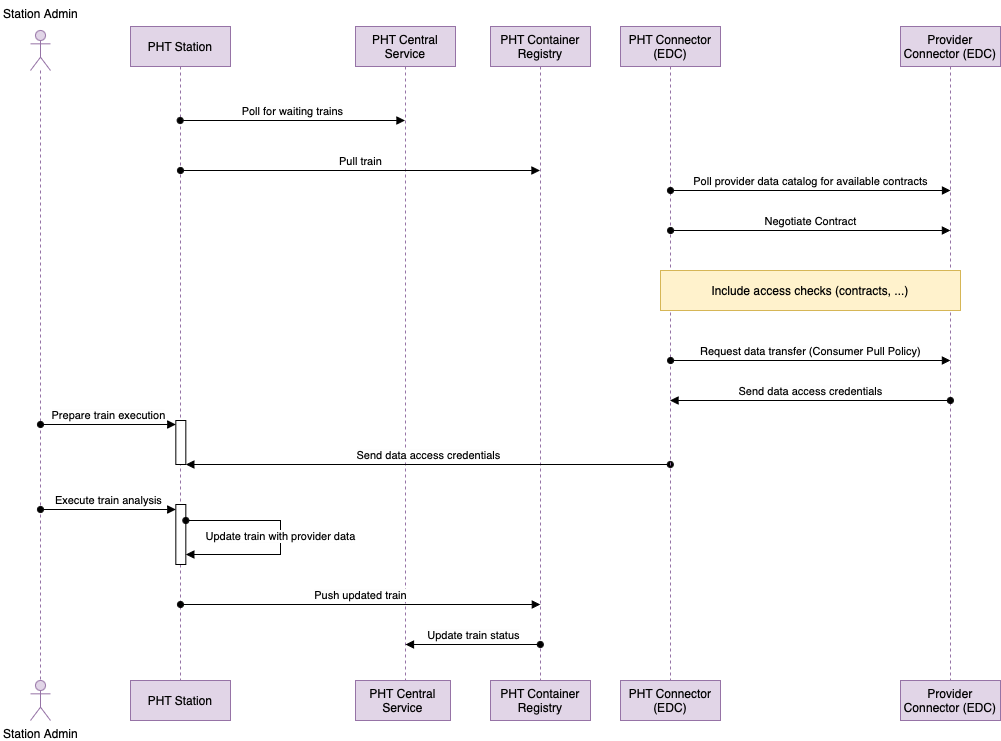

The following is a sequence diagram of the interaction between the Station Software and the EDC Connector.

To use the EDC connector as a consumer, we deploy it alongside the PADME Station Software. We do this in the Docker Compose file as follows. More information can be found here.

```yaml
services:
  pht-web:
    ...
    environment:
      ...
      - EDC_HOST=consumer
      - EDC_PORT=29193
      - EDC_MANAGEMENT_API_KEY=password # should be changed
      - EDC_API_AUTH_KEY=password # should be changed
    ...
  consumer:
    image: "repository.padme-analytics.de/stationsoftware/edc:main-fairds"
    entrypoint: java -jar /app/connector.jar
    environment:
      EDC_PARTICIPANT_ID: consumer
      EDC_HOSTNAME: consumer
      WEB_HTTP_PORT: 29191
      WEB_HTTP_PATH: /api
      WEB_HTTP_PUBLIC_PORT: 29291
      WEB_HTTP_PUBLIC_PATH: /public
      WEB_HTTP_CONTROL_PORT: 29192
      WEB_HTTP_CONTROL_PATH: /control
      WEB_HTTP_MANAGEMENT_PORT: 29193
      WEB_HTTP_MANAGEMENT_PATH: /management
      WEB_HTTP_PROTOCOL_PORT: 29194
      WEB_HTTP_PROTOCOL_PATH: /protocol
      EDC_CONTROL_ENDPOINT: http://consumer:29192/control
      EDC_DSP_CALLBACK_ADDRESS: http://consumer:29194/protocol
      EDC_API_AUTH_KEY: password # should match 'EDC_MANAGEMENT_API_KEY' in pht-web service
      EDC_KEYSTORE: /app/certs/cert.pfx
      EDC_KEYSTORE_PASSWORD: 123456 # should be changed
      EDC_VAULT: /app/configuration/consumer-vault.properties
      EDC_FS_CONFIG: /app/configuration/consumer-configuration.properties
    networks:
      - pht-net
...
```
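With this configuration, the Station Software reaches the consumer connector’s management API at `consumer:29193` under `/management`. As a minimal sketch of the first consumer step, fetching a provider’s catalog, the code below prepares the corresponding request. The `/v2/catalog/request` route, the `X-Api-Key` header, and the body fields are assumptions modeled on the EDC management API and may differ in the deployed version. The provider address and function name are hypothetical.

```python
import json
import urllib.request

# JSON-LD vocabulary used by EDC management API payloads (assumed).
EDC_NS = "https://w3id.org/edc/v0.0.1/ns/"

# Host, port, and path taken from the compose configuration above.
MANAGEMENT_BASE = "http://consumer:29193/management"


def build_catalog_request(provider_protocol_url, api_key, base=MANAGEMENT_BASE):
    """Prepare (but do not send) a catalog request for a provider connector."""
    body = {
        "@context": {"@vocab": EDC_NS},
        # Dataspace protocol endpoint of the provider whose catalog we want.
        "counterPartyAddress": provider_protocol_url,
        "protocol": "dataspace-protocol-http",
    }
    return urllib.request.Request(
        f"{base}/v2/catalog/request",  # route and version are assumptions
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Api-Key": api_key},
        method="POST",
    )
```

The catalog returned for such a request lists the offers that the consumer can then negotiate a contract for, after which a transfer can be started and the data pulled.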